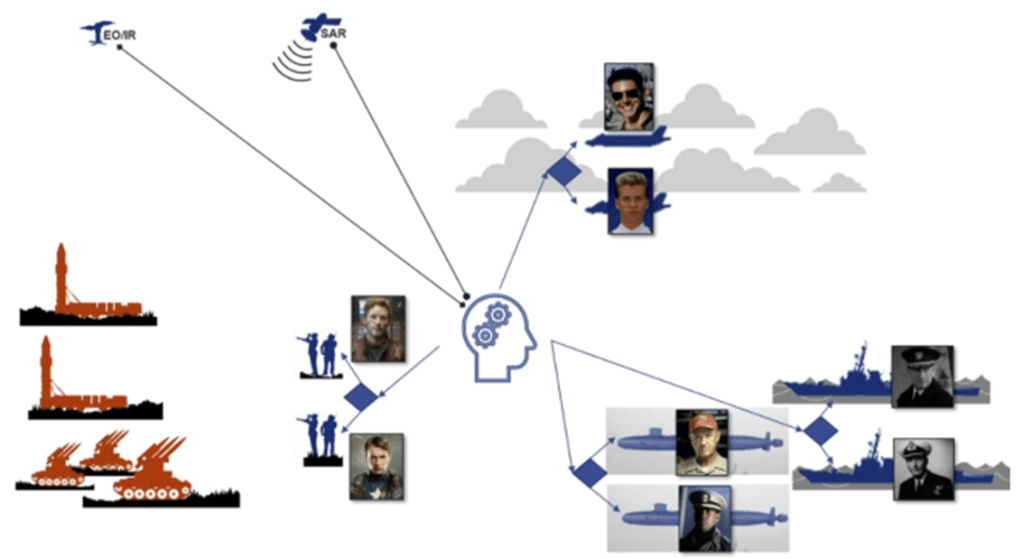

The research presented here builds on an existing multi-domain robotic teammate framework by exploring the bilateral nature of Human-Machine Team (HMT) trust to optimize mission outcomes in a Mosaic-like warfare paradigm. The scientific community currently focuses on measuring human trust in synthetic agent teammates and on the importance of building and sustaining well-calibrated trust to promote the HMT interactions necessary for successful missions. Measuring trust objectively and in real time is a difficult problem, yet solving it is essential to support future warfare, which will require rapidly reconfigurable multi-platform HMTs to address the mounting challenges posed by peer threats. However, the time-sensitive nature of these reconfigurable, multi-domain, multi-platform kill webs presumes reliance on synthetic agents, driven by sophisticated Artificial Intelligence (AI), to make split-second decisions about how best to configure and reconfigure them. Those critical decisions must account for the extent to which a synthetic agent can trust a human operator's ability to complete mission tasks.

This paper explores and defines HMT relationships across synthetic agents' personas within our existing multi-domain robotic teammate framework to (a) identify humans' psychophysiological constraints across HMTs that can affect a synthetic agent's trust in humans, and (b) promote the concept of a synthetic Mosaic agent that uses trust to rapidly assign tasks across HMTs and optimize mission outcomes. Theoretical findings are presented through an applied anti-satellite strike-and-response use case to highlight the bilateral nature of trust in HMTs as a key enabler of mission success.
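The abstract does not specify how a synthetic Mosaic agent would score its trust in each teammate or turn that score into task assignments. The following is only a minimal, hypothetical sketch of the general idea under stated assumptions: the `Teammate` fields (`skill`, `reliability`, `workload`, `fatigue`), the `agent_trust` discount formula (a human's reliability scaled down by the mean of workload and fatigue, standing in for psychophysiological constraints), and the greedy `assign_tasks` rule (pick the teammate with the highest trust × skill) are all illustrative inventions, not the authors' method.

```python
from dataclasses import dataclass


@dataclass
class Teammate:
    """A human or synthetic teammate (all fields are hypothetical)."""
    name: str
    is_human: bool
    skill: dict[str, float]       # task type -> proficiency in [0, 1]
    reliability: float = 1.0      # baseline reliability in [0, 1]
    workload: float = 0.0         # human-only load indicator in [0, 1]
    fatigue: float = 0.0          # human-only fatigue indicator in [0, 1]


def agent_trust(mate: Teammate) -> float:
    """Synthetic agent's trust in a teammate, in [0, 1].

    Assumption: a machine's trust score is its baseline reliability; a
    human's score is that reliability discounted by the mean of the
    workload and fatigue indicators.
    """
    if not mate.is_human:
        return mate.reliability
    load = (mate.workload + mate.fatigue) / 2
    return mate.reliability * max(0.0, 1.0 - load)


def assign_tasks(tasks: list[str], team: list[Teammate]) -> dict[str, str]:
    """Greedily give each task to the teammate with the highest trust * skill."""
    return {
        task: max(team, key=lambda m: agent_trust(m) * m.skill.get(task, 0.0)).name
        for task in tasks
    }


if __name__ == "__main__":
    operator = Teammate("operator", True, {"track": 0.9, "strike": 0.6},
                        reliability=0.95, workload=0.2, fatigue=0.2)
    uav = Teammate("uav", False, {"track": 0.7, "strike": 0.8}, reliability=0.9)

    print(assign_tasks(["track", "strike"], [operator, uav]))
    # {'track': 'operator', 'strike': 'uav'}

    # The operator becomes fatigued and overloaded; trust drops, so the
    # agent reallocates the tracking task to the machine teammate.
    operator.workload, operator.fatigue = 0.6, 0.8
    print(assign_tasks(["track", "strike"], [operator, uav]))
    # {'track': 'uav', 'strike': 'uav'}
```

Re-running the assignment whenever a teammate's indicators change is one simple way to mimic rapid reconfiguration; a real system would likely need a richer trust model and an assignment method that respects capacity limits rather than this greedy rule.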

https://www.modsimworld.org/papers/2022/MODSIM_2022_paper_13.pdf